In the previous post of our database series, we discussed the key decisions that helped us identify the design problems we wanted to tackle at Woopra. These design decisions enabled us to develop a large-scale custom infrastructure that can handle billions of data points every month.

In this post, we discuss in detail the Woopra architecture that lets us handle the volume of user events we track every day, all in real time. We built a robust ecosystem that future-proofs our analytics against the anticipated growth in the number of users and user events that Woopra serves at any given time.

What about Real-time Personalization?

Woopra is designed to track unique website and mobile app users and their activities, such as signups, product usage, product engagement, content downloads, live chats and add-to-cart actions. To track all this activity, users of Woopra send event data to the system. Event data can arrive at Woopra in any format. This data must be transformed and restructured to make it accessible and actionable.

Most systems that process data on a large scale use a batch-oriented processing paradigm. In batch processing systems, the continuously flowing data is first broken into chunks or “windows”. Each window is processed and committed to the database at periodic intervals, and its data can be queried only after it has been committed.

While this may work in some cases, it is a serious disadvantage if you are trying to use the data for real-time personalization.

Brands today want to create meaningful experiences for their customers in a context that is current and relevant. But how do they accomplish this? It involves establishing a good understanding of their customers through data and then delivering an omnichannel strategy to take the right action at the right time. They have a very short window of opportunity to enable an action before their customers move on to something else.

For example, consider the VP of Product Design at a consumer electronics company who looks up flights to Las Vegas every December to attend the CES conference in January. Knowing this pattern, we want to send her a personalized promotional offer while she is on the website looking for flight deals, not 15 minutes later, by which point she might have already booked her flight with another ticket vendor. In such cases, the data loses its value almost right away, so you want to analyze and act on it immediately.

Stream Processing

If you need to analyze and act on incoming data almost immediately, the batch processing model is all but useless. To address this, we built Woopra on a stream processing model.

When data is processed as a stream, there is no windowing: if it takes, for example, 40 milliseconds to process one incoming event, then that event is queryable in 40 milliseconds. If the data were instead windowed, as in batch processing, into groups of, say, 1,000 events, usually none of the events in a window would be queryable until the final one had been processed. Similarly, with 15-minute batches, the system would have to wait a full 15 minutes before processing a given batch of events.
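The latency gap can be made concrete with a little arithmetic. The sketch below is illustrative only: the function names, the fixed per-event cost, and the simplification that a batch is processed instantly once its window closes are assumptions for the example, not a description of Woopra's internals.

```python
def stream_queryable_at(arrival_ms: float, per_event_ms: float) -> float:
    """In a stream, an event is queryable as soon as it has been processed."""
    return arrival_ms + per_event_ms


def batch_queryable_at(arrival_ms: float, window_ms: float, batch_process_ms: float) -> float:
    """In a time-windowed batch, an event waits for its window to close,
    then for the whole batch to be processed and committed."""
    window_index = int(arrival_ms // window_ms)
    window_close = (window_index + 1) * window_ms
    return window_close + batch_process_ms


FIFTEEN_MIN_MS = 15 * 60 * 1000

# An event that arrives 1 second into a 15-minute window.
arrival = 1_000.0
stream_latency = stream_queryable_at(arrival, per_event_ms=40.0) - arrival
batch_latency = batch_queryable_at(arrival, FIFTEEN_MIN_MS, batch_process_ms=0.0) - arrival

print(f"stream: {stream_latency:.0f} ms")
print(f"batch:  {batch_latency / 1000:.0f} s")
```

Even with a batch processing cost of zero, the windowed event in this example waits almost the full 15 minutes, while the streamed event is available after its own processing time.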

In contrast to batch processing, stream processing takes the tracking event data and continuously processes it as the data flows through the system. Real-time streaming systems are time-consuming to build and require significant system-level architecture and engineering. However, as a result of being engineered for stream processing, the Woopra system can receive an event, and within a few hundred milliseconds — even before the data is committed — evaluate it as the latest in a history of actions performed by a user.

Moreover, once it receives the event, it can determine whether this action is the catalyst that makes the user match a user segment, and whether or not to fire a particular trigger in real time.

Triggers in Woopra are automated actions: users define a user segment and a set of action and visitor constraints that set off the trigger. For example, triggers can be used to identify a returning visitor and display a call to action requesting them to join a newsletter. Or triggers can automatically generate a status report and send it to your team before your weekly meeting.
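As a rough illustration of evaluating triggers per event, consider the sketch below. Everything in it is hypothetical: `UserProfile`, `Trigger`, `on_event`, and the session-based definition of a "returning visitor" are names and rules invented for the example, not Woopra's API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class UserProfile:
    user_id: str
    events: list[dict] = field(default_factory=list)


@dataclass
class Trigger:
    name: str
    segment: Callable[[UserProfile], bool]       # visitor constraint
    action_filter: Callable[[dict], bool]        # constraint on the incoming event
    action: Callable[[UserProfile, dict], None]  # what to do when both hold


def on_event(profile: UserProfile, event: dict, triggers: list[Trigger]) -> list[str]:
    """Add the event to the user's history, then fire every trigger whose
    constraints hold now that the event is part of that history."""
    profile.events.append(event)
    fired = []
    for trigger in triggers:
        if trigger.action_filter(event) and trigger.segment(profile):
            trigger.action(profile, event)
            fired.append(trigger.name)
    return fired


# Hypothetical trigger: show a newsletter call to action to returning visitors,
# defined here as visitors seen in at least two distinct sessions.
shown_to: list[str] = []
newsletter_cta = Trigger(
    name="newsletter_cta",
    segment=lambda p: len({e.get("session") for e in p.events}) >= 2,
    action_filter=lambda e: e.get("name") == "pageview",
    action=lambda p, e: shown_to.append(p.user_id),
)

profile = UserProfile("u1")
first = on_event(profile, {"name": "pageview", "session": "s1"}, [newsletter_cta])
second = on_event(profile, {"name": "pageview", "session": "s2"}, [newsletter_cta])
```

The first pageview fires nothing. The second one arrives in a new session, which is the event that makes the visitor match the segment, so the trigger fires at that moment rather than after a later batch run.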

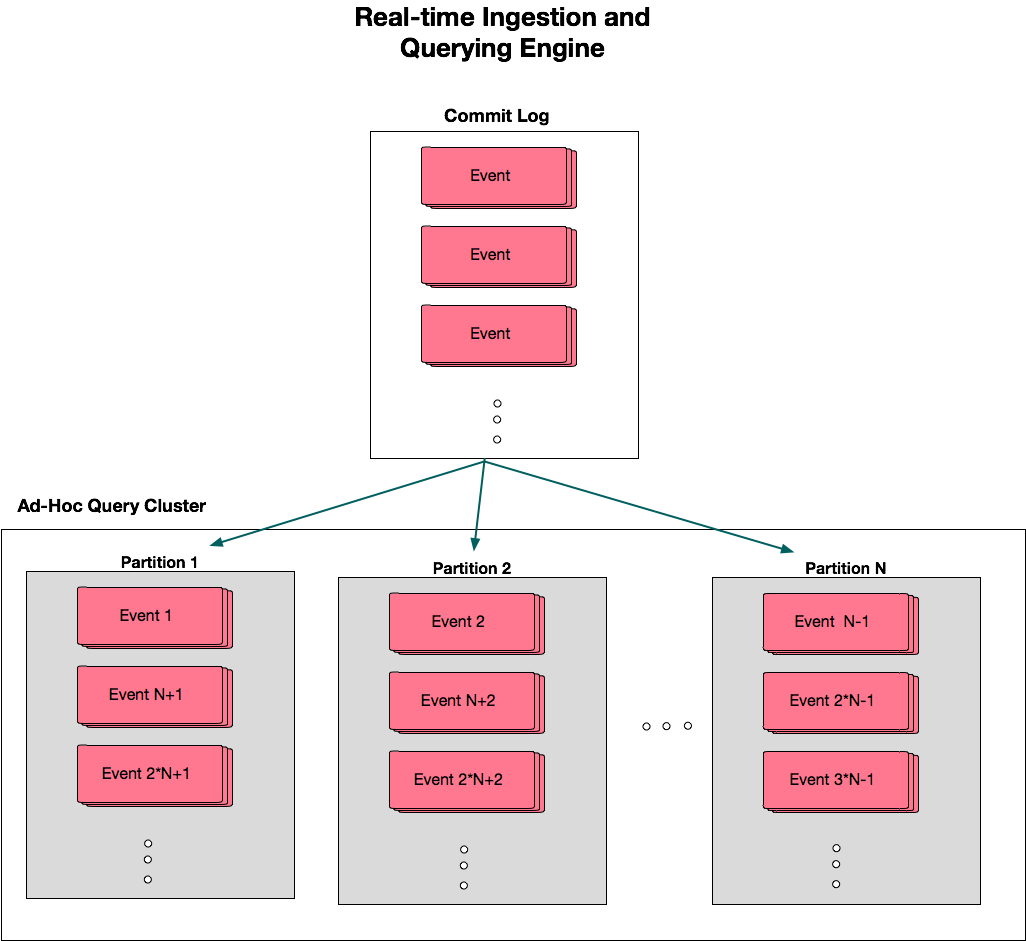

Real-time Ingestion and Querying Engine

At Woopra, we wanted to keep the latency of all our data processing to a minimum while ensuring the completeness and accuracy of the data. Regardless of the size or complexity of the data, the system’s reaction time to evaluate defined segments and run engagement triggers should be within 100–200 milliseconds. We committed to this ambitious benchmark to make sure the system works seamlessly for real-time personalization use cases such as engaging with customers on a landing page or offering timely promotions during a peak holiday season.

The real-time ingestion and querying engine appends the incoming stream of events to the tail of the commit log. At the same time, the data is partitioned and distributed dynamically across several network nodes.

The data is then ready for ad-hoc querying and action. The events are ingested in a separate process, so a spike in traffic will not overload the system I/O; at worst, it will gracefully delay the real-time response.

This enables Woopra to run ad-hoc queries all the time, without committing data in batches, or pre-building customer analytics reports.

Ad-hoc Query Cluster

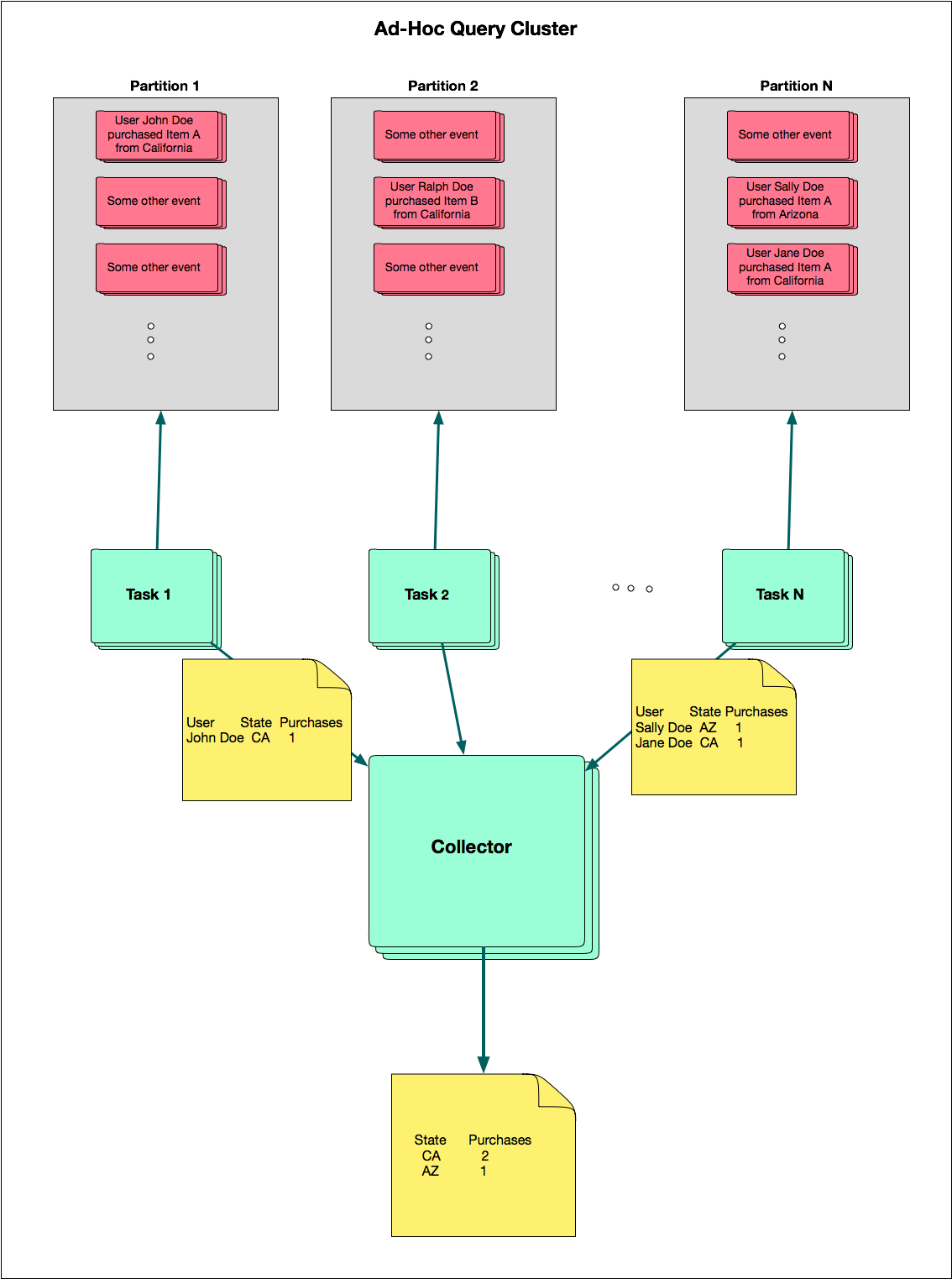

Let’s say you’re an eCommerce company and you want to understand how well a certain item “A” sells in California. The ad-hoc query cluster compiles a simple JSON query into several machine-code tasks that each run on the corresponding network partition.

All activity from a given customer, say John Doe, is located on a pre-determined partition where his information already exists. This is based on a hash function that partitions the data so that all the data about a single customer is always located on the same partition.
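A minimal sketch of this kind of hash partitioning follows. The function name `partition_for`, the use of SHA-256, and the fixed partition count are assumptions made for illustration; the post does not say which hash function or partition-assignment scheme Woopra uses.

```python
import hashlib


def partition_for(user_id: str, num_partitions: int) -> int:
    """Map a user ID to a partition with a stable hash, so that every event
    for the same user lands on the same partition."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


NUM_PARTITIONS = 4  # hypothetical cluster size
partitions: dict[int, list[dict]] = {i: [] for i in range(NUM_PARTITIONS)}

events = [
    {"user": "john.doe", "action": "view", "item": "A"},
    {"user": "sally.doe", "action": "purchase", "item": "A"},
    {"user": "john.doe", "action": "purchase", "item": "A"},
]
for event in events:
    partitions[partition_for(event["user"], NUM_PARTITIONS)].append(event)

# Every partition that holds at least one of John Doe's events.
john_partitions = {
    pid for pid, evs in partitions.items() for e in evs if e["user"] == "john.doe"
}
```

A cryptographic digest is used here instead of Python's built-in `hash()` because string hashing in Python is randomized per process, which would break the "same user, same partition" guarantee across restarts. Plain modulo assignment also reshuffles most users when the partition count changes, so a production system would likely need something more elaborate.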

For the sake of simplicity, for now, we’ll ignore several possible issues that might occur with this process such as deleted profiles, merged profiles and event timestamps (these will be covered in a separate blog).

In this example, John Doe purchasing item “A” in California runs on partition 1. By contrast, similar purchases made by Sally Doe in Arizona and Jane Doe in California run on a separate partition.

Then, “N” tasks will be created and scheduled on “N” partitions. So the task on partition 1 is created to process the transactions made by John Doe (purchase of the item “A”). At the same time, a task on partition “N” is created to process the transactions made by Sally Doe and Jane Doe.

Each task will then automatically collect the required data and generate columns for only the relevant fields. In this case, those fields are *user*, *state* and *purchases*, since we only need to find how well item “A” sells in California. Note that the task on partition 2 does not return anything, as Ralph Doe did not purchase item “A”. Each task then runs the report on this subset of data, aggregating the purchases of item “A” by state, and relays its response back to a master node for collection.
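The scatter-and-gather flow described above can be sketched as follows. This is an in-process approximation under stated assumptions: the partition contents, the field names, and the `run_task` and `run_query` helpers are hypothetical, and a thread pool stands in for tasks running on separate network nodes.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical partition contents, loosely following the example in the text.
PARTITIONS = {
    1: [{"user": "John Doe", "state": "CA", "action": "purchase", "item": "A", "page": "/cart"}],
    2: [{"user": "Ralph Doe", "state": "CA", "action": "purchase", "item": "B", "page": "/cart"}],
    3: [
        {"user": "Sally Doe", "state": "AZ", "action": "purchase", "item": "A", "page": "/cart"},
        {"user": "Jane Doe", "state": "CA", "action": "purchase", "item": "A", "page": "/cart"},
    ],
}


def run_task(events: list[dict], item: str) -> Counter:
    """Per-partition task: build columns for the relevant fields only,
    then count purchases of `item` by state."""
    columns = {"user": [], "state": [], "purchases": []}
    for event in events:
        if event["action"] == "purchase":
            columns["user"].append(event["user"])
            columns["state"].append(event["state"])
            columns["purchases"].append(event["item"])
    # Fields such as "page" were never copied into a column.
    return Counter(
        state
        for state, bought in zip(columns["state"], columns["purchases"])
        if bought == item
    )


def run_query(item: str) -> Counter:
    """Master node: schedule one task per partition, then merge the partial results."""
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(lambda evs: run_task(evs, item), PARTITIONS.values()))
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return total


result = run_query("A")
print(result["CA"])
```

Because each task returns only a small per-state count, the master node merges a handful of numbers instead of moving raw events across the network.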

In the use cases where personalization or just-in-time marketing is necessary, this can be a game changer!

Woopra can provide insights that are truly real-time, giving our customers a significant competitive advantage and saving them time and cost. A number of our customers implement use cases similar to the one above, resulting in a significant increase in revenue and a much better customer experience.

Most recently, a leading budget airline that uses Woopra leveraged its real-time streaming capability to send personalized emails to visitors who looked for the same deal four times in a row. On the fourth time, they received a promotional email (“Save 20% if you book today!”) while they were still browsing the website for deals. The promotion resulted in a noticeable increase in sales for the company.
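A rule like "the same deal four times in a row" only needs a small amount of per-visitor state when it is evaluated on a stream. The sketch below shows one way to express it; the class name `RepeatedDealTrigger`, its method, and the deal identifiers are hypothetical and are not taken from the airline's actual setup.

```python
from collections import defaultdict


class RepeatedDealTrigger:
    """Fires when a visitor views the same deal `threshold` times in a row."""

    def __init__(self, threshold: int = 4) -> None:
        self.threshold = threshold
        self._last_deal: dict[str, str] = {}
        self._streak: dict[str, int] = defaultdict(int)

    def on_view(self, visitor_id: str, deal_id: str) -> bool:
        if self._last_deal.get(visitor_id) == deal_id:
            self._streak[visitor_id] += 1
        else:
            # A different deal breaks the streak and starts a new one.
            self._last_deal[visitor_id] = deal_id
            self._streak[visitor_id] = 1
        # Fire exactly once, on the view that reaches the threshold.
        return self._streak[visitor_id] == self.threshold


trigger = RepeatedDealTrigger(threshold=4)
views = ["LAS-DEC", "LAS-DEC", "LAS-DEC", "LAS-DEC", "LAS-DEC"]
fired = [trigger.on_view("visitor-1", deal) for deal in views]

# A visitor who alternates between deals never builds a streak of four.
interrupted = [
    trigger.on_view("visitor-2", deal)
    for deal in ["LAS-DEC", "LAS-DEC", "NYC-JAN", "LAS-DEC", "LAS-DEC", "LAS-DEC"]
]
```

The decision is made inside the call that handles the fourth view, which is what lets the email go out while the visitor is still on the site.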

Creating a New Data Format

Woopra tracks events in a way that lets users identify the customer behavior relevant to their specific business use case. These user-generated events contain thousands of data points, and storing them in a compact format can significantly improve query performance.

To accomplish the 100–200 millisecond trigger time, we had to store our data in efficient formats to reduce the file size and increase the speed of processing a query.

Not satisfied with existing data formats such as JSON or MessagePack, we designed our own and called it Flat Map, or FlaM!

FlaM is significantly faster than other common formats as it is even more compact and can be stored easily in flat files. The FlaM event logs are central to the Woopra database and allow our customers to send us millions of data points without having to worry about processing speeds.

FlaM event logs are flexible and do not require any pre-set schemas. These event logs are stored in simple files that are structured only transactionally. The data is broken into columns at query time, not at commit time, so a query that needs to read, for example, the top-selling items will scan only the item_price data and ignore the rest. This allows for an entirely schema-less data store while maintaining low latency and efficient I/O, even for the most complex queries.
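The idea of building columns at query time from schema-less records can be sketched in a few lines. This is not the FlaM format: plain Python dictionaries stand in for decoded log records, and `project_column` is a hypothetical helper. A real implementation would presumably avoid decoding the fields it does not need.

```python
def project_column(log: list[dict], field: str) -> list:
    """Build a single column at query time from schema-less records.
    Records that do not carry the field are skipped."""
    return [record[field] for record in log if field in record]


# Records are stored in arrival order and need not share a schema.
event_log = [
    {"event": "purchase", "item": "A", "item_price": 30.0, "state": "CA"},
    {"event": "signup", "plan": "pro"},
    {"event": "purchase", "item": "B", "item_price": 12.5},
]

prices = project_column(event_log, "item_price")
total_revenue = sum(prices)
```

Nothing about `item_price` had to be declared when the events were written; the column exists only for the duration of the query that asks for it.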

Source of Truth

The FlaM logs are the core of Woopra’s multi-system database. They are the source of truth, which means that they alone contain all of the data sent to Woopra, and they are used to populate the data structures in every other database layer. The FlaM logs are the ultimate authority on what is “true” in the data.

But the Woopra database is much more than the FlaM log files. It is a multi-system database with different systems storing different data or the same data in a different way.

As a whole, the Woopra database system comprises many other layers responsible for various aspects of committing and querying data as efficiently and accurately as possible in a streaming, real-time pipeline.

Conclusion

The Woopra team is committed to creating the most seamless experience for our users so that they can rely on Woopra to be their one-stop analytics tool and ask any question they want of their customer data. This powerful real-time infrastructure enables integrations with dozens of third-party applications that allow users to cater to the analytics needs of their entire organization.

In 2018 and beyond, we will continue to build exciting new features for our user community. This highly efficient system architecture provides us with the robustness to expand our horizons and provide even more value to our users all over the world.
