Not many marketers today will argue against the value of being “data-driven.” But what that specifically means is often in question. And despite the “data-driven” virtue being constantly extolled in blog posts, conferences, and boardrooms, many of us still operate largely on gut feel.

But it’s easy to use data to make decisions; the tools are here to do so. And while we understand the value of traditional session-based web analytics, as well as customer analytics like Woopra, we can also layer in qualitative insights to really get a grasp on user behavior and customer expectations.

So this piece will contain three parts.

We’ll lay the foundation with your quantitative data collection methods.

We’ll layer on some qualitative feedback to get a better understanding of how you can improve the user experience.

We’ll talk about combining the two to come up with hypotheses for experiments and improvements to the customer experience.

Quantitative User Data: Laying the Foundation

I come from a marketing and conversion rate optimization background, so my preferred day is spent in an analytics platform analyzing customer behavior. Also, keep in mind that my reference point is that of a marketer, but quantitative behavioral data also helps sales, service, and customer success employees do their job better.

There are so many tools available to do this today. The important thing is that you configure an analytics setup to collect information aligned with your business goals. This could be measuring the number of users reaching a particular goal on your site, how many are using a set of features, or how much time they spend looking at your knowledge base articles.

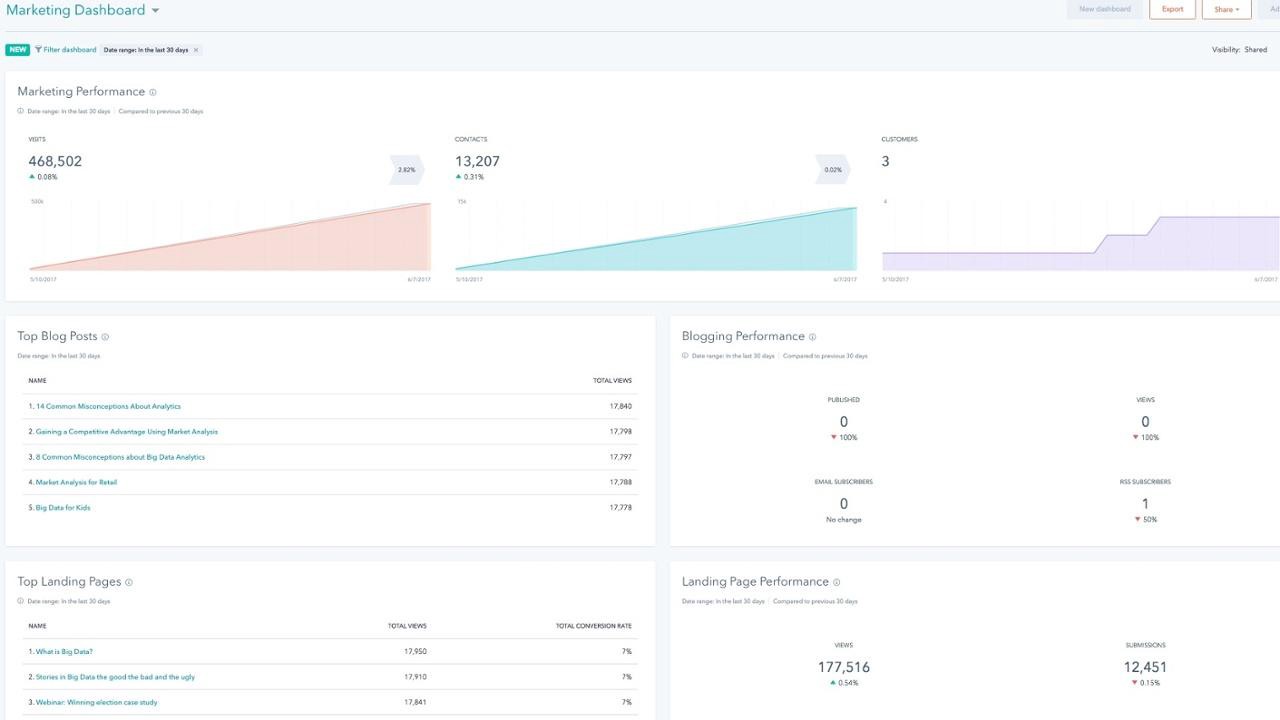

In addition, you can often find pretty solid quantitative insights in your CMS or ecommerce platform. At HubSpot, we have a variety of reporting features to bridge the marketing analytics and CRM divide. When you centralize data in this way, you can really uncover insights across the whole customer journey, from initial website contact all the way through the sale and more.

Similarly, with Woopra, you can combine this essential HubSpot engagement data with product engagement, mobile, support, NPS, chat and more to understand and optimize every touchpoint in the customer journey.

For example, let’s say you want to see if a marketing campaign launched around a new product feature had an impact on NPS score. You’ll want to look at email engagement, product data and NPS scores given after the campaign to understand if there’s a correlation.

Using a tool like Woopra, coupled with their one-click integrations to HubSpot and the NPS tool, Delighted, these questions can be answered in a matter of moments.

For example, check out the Woopra Customer Journey report below. Here, I’m looking at everyone in the last 90 days who opened our HubSpot email referencing the feature, engaged with the feature, and then went on to leave a positive NPS score.

See how you can customize the timeframe, email subject, type of product feature, and NPS score? This gives you the flexibility to find the exact audience you’re looking to learn from. Adding in other elements, such as churn or support tickets opened, lets you expand even further and answer nearly any question about your data.
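If you want to reason about what a journey report like this is doing, here is a minimal sketch in Python. It is not Woopra’s or HubSpot’s API; the event log, the field names, the feature name, and the cutoff of 9 for a “positive” NPS score (the usual promoter threshold) are all assumptions made for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical flattened event log. In practice this would come from an
# analytics export; every field name and value here is made up.
EVENTS = [
    {"user": "ana", "type": "email_open", "subject": "New: Smart Reports", "at": datetime(2024, 5, 1)},
    {"user": "ana", "type": "feature_use", "feature": "smart_reports", "at": datetime(2024, 5, 3)},
    {"user": "ana", "type": "nps", "score": 9, "at": datetime(2024, 5, 10)},
    # ben opened the email and left a score but never used the feature
    {"user": "ben", "type": "email_open", "subject": "New: Smart Reports", "at": datetime(2024, 5, 1)},
    {"user": "ben", "type": "nps", "score": 10, "at": datetime(2024, 5, 12)},
    # cho completed the first two steps but is not a promoter
    {"user": "cho", "type": "email_open", "subject": "New: Smart Reports", "at": datetime(2024, 5, 2)},
    {"user": "cho", "type": "feature_use", "feature": "smart_reports", "at": datetime(2024, 5, 4)},
    {"user": "cho", "type": "nps", "score": 6, "at": datetime(2024, 5, 11)},
    # dee did everything, but outside the 90-day window
    {"user": "dee", "type": "email_open", "subject": "New: Smart Reports", "at": datetime(2024, 1, 5)},
    {"user": "dee", "type": "feature_use", "feature": "smart_reports", "at": datetime(2024, 1, 6)},
    {"user": "dee", "type": "nps", "score": 10, "at": datetime(2024, 1, 10)},
]


def journey_audience(events, now, days=90, subject_contains="Smart Reports",
                     feature="smart_reports", min_score=9):
    """Return users who opened the email, then used the feature, then left
    an NPS score of at least min_score, all within the last `days` days."""
    cutoff = now - timedelta(days=days)
    progress = {}  # user -> number of journey steps completed so far
    for e in sorted(events, key=lambda e: e["at"]):
        if e["at"] < cutoff:
            continue
        step = progress.get(e["user"], 0)
        if step == 0 and e["type"] == "email_open" and subject_contains in e.get("subject", ""):
            progress[e["user"]] = 1
        elif step == 1 and e["type"] == "feature_use" and e.get("feature") == feature:
            progress[e["user"]] = 2
        elif step == 2 and e["type"] == "nps" and e.get("score", 0) >= min_score:
            progress[e["user"]] = 3
    return sorted(user for user, step in progress.items() if step == 3)


audience = journey_audience(EVENTS, now=datetime(2024, 6, 1))
print(audience)
```

Changing `days`, `subject_contains`, `feature`, or `min_score` mirrors the kind of customization described above: each parameter narrows or widens the audience you are learning from.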

With the ability to compare data points across tools and teams, you gain incredible insight into how marketing campaigns impact other elements of the business to improve the customer experience across the organization. This information can then be leveraged to, for example, fuel personalized campaigns or optimize those that are delivering your best customer types.

Whatever tool you use, I like to start with business questions and work my way into analysis. Some common questions I like to ask when reviewing user behavior include:

What site pages are most important to my business?

Which landing pages are underperforming compared to the rest of them? Are any performing exceptionally well compared to the average?

Are there specific browsers or devices that are underperforming?

What’s the biggest drop-off point in the goal funnel? Is it more or less extreme in specific user segments?

Which traffic sources are performing better or worse than average?

Are site speed and loading time affecting my conversion rate or user behavior?

How does churn look over time and within subsets of users?

Is our configuration even properly set up to capture the right information? How can we improve our setup to get a better picture of our users?

That’s a short and incomplete list of business questions, most of which matter mainly in a CRO context, but hopefully they’ll give you inspiration to start asking your own questions of your data.
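To make one of these questions concrete, the funnel drop-off question can be answered with very little code once you have step counts per segment. The sketch below uses Python with invented numbers; the step names, segments, and counts are assumptions, not real data.

```python
# Hypothetical counts of users reaching each funnel step, per device segment.
FUNNEL_STEPS = ["product", "cart", "checkout", "purchase"]
COUNTS = {
    "desktop": [1000, 400, 300, 240],
    "mobile": [1200, 600, 150, 120],
}


def biggest_drop_off(steps, counts):
    """Return (from_step, to_step, drop_rate) for the steepest
    step-to-step loss in the funnel, or None if it cannot be computed."""
    worst = None
    for i in range(len(steps) - 1):
        if counts[i] == 0:
            continue  # avoid dividing by zero on an empty step
        rate = 1 - counts[i + 1] / counts[i]
        if worst is None or rate > worst[2]:
            worst = (steps[i], steps[i + 1], round(rate, 3))
    return worst


for segment, counts in COUNTS.items():
    print(segment, biggest_drop_off(FUNNEL_STEPS, counts))
```

With these made-up numbers, desktop loses the most users between product and cart, while mobile loses the most between cart and checkout. A difference like that between segments is exactly the kind of finding worth marking down for qualitative follow-up.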

Other tools for quantifying user behavior include form analytics, click heatmaps, and eye tracking. These are usually used, and should be used, as supplements to more robust user tracking solutions.

The important summary here: use passively collected behavioral data to find out what users are actually doing and to uncover potential issues and opportunity areas. Mark them down and you can begin further investigation with qualitative data.

Qualitative User Feedback: Finding the Why

Qualitative data is underrated.

First, at earlier stages, companies really can’t be “data-driven” in the context of quantitative data. You simply don’t have the quantity to run hundreds of A/B tests at this point. You simply need to talk to customers and get as much qualitative data as you can.

Second, customer feedback and attitudinal data can be a wonderful complement to quantitative data. You can find out what the problem is with quantitative data, but you can tease out the why with qualitative data.

Third, customer feedback multiplies in value when you share it with different teams. For instance, in-product feedback can be used by sales teams to set expectations, by marketing teams to craft value propositions and more accurate campaigns, and of course by product and customer success teams to build features and optimize the early customer experience, respectively.
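To put a rough number on the first point, here is a back-of-the-envelope sketch of how much traffic a single A/B test can require. It uses the standard normal-approximation formula for comparing two proportions; the 3% baseline conversion rate, the 10% relative lift, and the defaults of 5% significance and 80% power are assumptions chosen for illustration.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect a relative lift
    in conversion rate with a two-sided test (normal approximation)."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed scenario: 3% baseline conversion, hoping to detect a 10% relative lift.
print(sample_size_per_variant(0.03, 0.10))
```

Under these assumptions, the answer comes out in the tens of thousands of visitors per variant, which is why an early-stage company is usually better served by talking to customers than by queuing up experiments.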

There are tons of methods for collecting qualitative data nowadays. Here’s just a short list:

Customer interviews

Focus groups

In-app or on-site surveys

Email surveys

User testing

Session replays

There’s a bit of a split between “active” and “passive” qualitative data collection. Customer interviews, user testing, and email surveys are all “active”: you have to ask or engage with a user in order to elicit information. This has pros and cons. You can certainly collect voice of customer data, but you also risk biasing the responses, since people often answer differently when they know they’re being observed.

Then, something like session replays is a “passive” form of data collection. Users simply interact with your site or app in an ordinary way, unaware of their anonymized user behavior patterns being collected. This has the main benefit of getting a true picture of unaffected user behavior.

It’s best to combine active and passive qualitative data, or at least be aware of when to use each depending on what your goals are.

Combining Things and Testing Hypotheses

It’s not enough to simply collect data. You need to act on it.

While the specifics may differ based on your use case, there is one commonality: you need to prioritize your findings in some meaningful way.

As I mentioned, I come from a conversion optimization background, where we collect user data largely to inform A/B test hypotheses. Luckily, a ton of work has already been done here, and there are a few popular acronym-based prioritization frameworks available.

All of them are slightly different, but really come down to two important variables: impact and costs. It’s an ROI equation. How much value can we expect and how much do we need to pay in terms of resources, uncertainty, time, or opportunity costs?

While I’m largely used to using prioritization frameworks for A/B testing, you can use the same frameworks to build a product management roadmap, experiment with new customer education initiatives, or even with new sales prospecting methods.

The general process I would use in any scenario is to first look at your notes on opportunity areas discovered through quantitative data, and then pair each one with a related explanatory insight from your qualitative data. By interpreting these together, you can usually reach a few ideas on how to act on the data.

Perhaps one finding is that mobile users are dropping off rapidly on your ecommerce cart page. If you have any quantitative insights that support this, such as technical issues found on something like BrowserStack, then you can add that as well.

Then, align your qualitative insights with this opportunity area. Perhaps you’ve discovered through user testing and session replays that your “Enter Coupon” button is too overpowering and is causing users to abandon your site in search of coupon codes.

These two insights together can form a hypothesis: if you reduce the prominence of your coupon form field and give more prominence to your “continue to checkout” button, more people will continue to checkout and purchase. You can have many possible iterations of the solution, but you’ve homed in on a problem area and have some insights on how to solve it.

That’s one row on your prioritization spreadsheet, and you need to rank it based on whatever variables are important to you (impact, ease, etc.).

The end result should be a prioritized list of opportunity areas that can inform your product, marketing, sales, or experience roadmap.
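As a rough illustration of what that spreadsheet boils down to, here is a minimal Python sketch. The three variables (impact, confidence, ease), the 1 to 10 scale, the simple average, and the example ideas and scores are all assumptions for illustration; swap in whatever variables and weights your own framework uses.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int      # expected value if it works, 1 (low) to 10 (high)
    confidence: int  # strength of supporting quant and qual evidence, 1 to 10
    ease: int        # inverse of cost and effort, 1 (hard) to 10 (easy)

    @property
    def score(self) -> float:
        # Plain average; a weighted average is an equally valid choice.
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(ideas):
    """Return ideas sorted from highest to lowest score."""
    return sorted(ideas, key=lambda idea: idea.score, reverse=True)


# Hypothetical rows; the scores are invented for the example.
IDEAS = [
    Idea("Redesign the entire checkout flow", impact=9, confidence=5, ease=2),
    Idea("Reduce coupon field prominence on mobile cart", impact=8, confidence=7, ease=9),
    Idea("Rewrite knowledge base article titles", impact=3, confidence=4, ease=8),
]

for idea in prioritize(IDEAS):
    print(f"{idea.score:.2f}  {idea.name}")
```

In this made-up example, the coupon field change ranks first because it combines solid evidence with low effort, even though a full checkout redesign might have a larger upside.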

Conclusion

Most people want to be “data-driven,” but how to get there is somewhat ambiguous. Looking at traffic reports and counting sessions per week doesn’t cut it anymore; you need to build feedback loops to collect both quantitative and qualitative data.

Moreover, you need to act on your data. If it lies dormant, it’s better off not collected in the first place (after all, there’s a cost to data collection in terms of time, software, and human resources).

So, whether you’re running A/B tests on an ecommerce site, optimizing a user onboarding experience, or building out a sales development organization and optimizing your approach, you can use quantitative and qualitative insights to improve your efforts.

The process is simple: collect, analyze, hypothesize, experiment or implement, and repeat.
